Generative Modeling

Generative modeling represents a fundamental class of machine learning models designed to create synthetic data that

closely mirrors real-world data distributions. These models find extensive application in fields such as computer vision,

security, and medicine, where their capability to map image content across domains has demonstrated significant value.

They play a crucial role in automated quality control for industrial processes, particularly in contexts with limited

defective samples due to privacy constraints or operational costs. Nevertheless, challenges such as imbalanced datasets,

mode collapse, and training instability restrict their broader applicability, especially on edge devices. To address

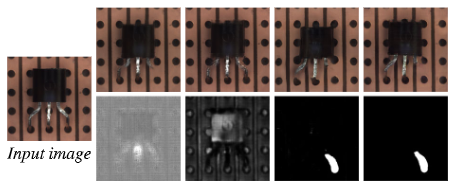

these challenges, we propose novel unpaired image-to-image (I2I) translation models capable of transforming defect-free

images into defective ones while simultaneously generating segmentation masks for defect localization. Furthermore,

combining multiple pre-trained I2I models using a binary-tree structure has been shown to enhance image synthesis quality

and optimize performance for resource-constrained environments. Inspection networks trained on synthetic data produced by

these models show improved accuracy in identifying real defects. Techniques such as weight-sharing and averaging pre-trained

model weights effectively reduce computational resource requirements while maintaining high-quality outputs. Our works also

investigate methods for deploying generative models on low-powered devices, thereby enhancing their scalability and efficiency.

These contributions represent a significant advancement in the practical and industrial applicability of generative models

by improving their performance and adaptability.
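
The text above does not say how the pre-trained weights are averaged. As a rough illustration only, the sketch below assumes the simplest variant: a uniform, element-wise mean over models that share the same parameter names and shapes. The `Weights` format (a dictionary of flat float lists), the function `average_weights`, and the parameter names are hypothetical and are not taken from the linked repository.

```python
from typing import Dict, List, Sequence

# Hypothetical format: parameter name -> flattened list of values.
Weights = Dict[str, List[float]]


def average_weights(models: Sequence[Weights]) -> Weights:
    """Return the element-wise mean of several models' parameters.

    Assumes every model has the same parameter names and sizes,
    i.e. all models share one architecture.
    """
    if not models:
        raise ValueError("need at least one model")
    reference = models[0]
    for model in models[1:]:
        if model.keys() != reference.keys():
            raise ValueError("models must share the same parameter names")

    averaged: Weights = {}
    for name, ref_values in reference.items():
        columns = [model[name] for model in models]
        if any(len(col) != len(ref_values) for col in columns):
            raise ValueError(f"size mismatch for parameter {name!r}")
        # zip(*columns) groups the i-th value of every model together.
        averaged[name] = [sum(vals) / len(models) for vals in zip(*columns)]
    return averaged


# Toy example with two "models" of identical layout.
model_a = {"conv.weight": [1.0, 2.0], "conv.bias": [0.0]}
model_b = {"conv.weight": [3.0, 4.0], "conv.bias": [1.0]}
merged = average_weights([model_a, model_b])
```

Under this assumption, a single merged set of weights stands in for several models at inference time, which is one way memory and compute could be reduced on a constrained device. Whether the authors use uniform averaging, a weighted scheme, or something else is not stated here.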

Code

https://github.com/pasqualecoscia/SyntheticDefectGeneration